Babies understand cost-reward tradeoffs behind others’ actions, study says

Harvard-MIT research may provide data to improve computational models for artificial intelligence and machine learning

Watching someone reach for the first cup of coffee in the morning involves more than seeing a hand reach for a mug. Observers can tell that the love of coffee helps motivate the person to get up; we understand that there’s a certain amount of effort, or cost, that goes into the physical process of reaching; and if we saw the drinker exert more effort to find a cup of coffee than a cup of tea, we might conclude that the drinker prefers coffee.

In a new Science paper, a team of researchers at Harvard University and the Massachusetts Institute of Technology (MIT) has found that children as young as 10 months old implicitly understand some of the hidden motivations that influence people’s actions. These infants gauge how much something is worth to a person by how hard that person is willing to work for it.

“Far from the blooming, buzzing confusion that William James described,” said Harvard graduate student and lead author Shari Liu, “infants interpret people’s actions in terms of the effort they expend in producing those actions, and also the value of the goals those actions achieve.”

In many ways, studies of infants reveal deep commonalities in the ways that people think throughout their lives, suggested senior author Elizabeth Spelke, the Marshall L. Berkman Professor of Psychology at Harvard. “Abstract, interrelated concepts like cost and value — concepts at the center both of our intuitive psychology and of utility theory in philosophy and economics — may originate in an early-emerging system by which infants understand other people’s actions.”

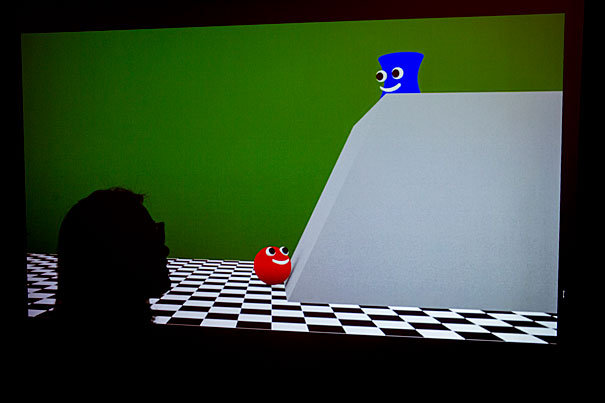

The researchers presented infants with different actions taken by an animated character toward one of two objects. In the first experiment, the character jumped a small barrier and refused to jump a medium barrier to reach one object, and jumped the medium barrier but refused a tall barrier to reach the other object. Infants were then tested with events in which both objects were present with no barriers and the character, standing between them, approached each of the objects on alternating trials. Infants looked longer when the character approached the object for which it had previously taken a less costly action. This suggests that infants expected the character to prefer the object that it had worked harder to reach.

In further studies, infants formed similar expectations when a character climbed a steeper hill or jumped a wider gap to get to one goal, even though the perceptual properties of these actions differed.

“This makes intuitive sense to us as adults,” explained Liu. “If you see your friend travel across the street to get a cup of coffee, and decline the same action for a cup of tea, you might later be surprised when your friend reaches for tea over coffee when both are right there in front of them.”

These studies of “action understanding” build on years of psychological research probing how children perceive the world and discern the causes of events. Past research has shown that preverbal children look longer at things that they find improbable or surprising.

“Although babies in the first year of life can’t yet tell us what they think, and can’t yet perform many actions themselves, they’re really curious,” said Liu. “One way that they explore the world is by looking at it and by making decisions about what to pay attention to, and what to look away from so that they can learn about something else.”

Predicting how infants think about other people’s thoughts is also part of a larger project of developing computational models for human cognition, machine learning, and artificial intelligence, models that aim to shed light on the nature of human intelligence and to guide the development of machines that think more like people do.

“If we can understand in engineering terms the intuitive theories that even these young infants seem to have, that hopefully would be the basis for building machines that have more human-like intelligence,” said paper author Josh Tenenbaum, a professor in MIT’s Department of Brain and Cognitive Sciences and a director at the MIT–Harvard Center for Brains, Minds, and Machines.

Tomer Ullman, a postdoc at the center and another of the paper’s authors, captured the study’s finding in a computational model. Building on previous computer models, such as those used by recent MIT Ph.D. Julian Jara-Ettinger to study cost-benefit tradeoffs in preschoolers, it assumed that people plan their actions to achieve maximal rewards at minimal cost, and that when others observe an action, they reason about the costs and rewards in the mind of the person who took it.
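The reasoning described above can be illustrated with a small sketch. It is not the model from the paper: it only assumes, for illustration, that an agent acts when a goal's reward exceeds the action's cost (with some noise), and that an observer works backward from accepted and refused actions to the reward. The barrier costs, the reward grid, the `temperature` parameter, and the function names are all hypothetical.

```python
# Illustrative sketch only; not the model used in the study.
# Assumption: the agent acts when reward - cost is positive, with some noise
# (a logistic choice rule). All numbers below are made up for illustration.
import math


def p_act(reward, cost, temperature=0.2):
    """Probability that a noisy reward-minus-cost maximizer takes the action."""
    return 1.0 / (1.0 + math.exp(-(reward - cost) / temperature))


def posterior_reward(observations, grid):
    """Posterior over a goal's reward given (cost, acted) pairs, uniform prior."""
    weights = []
    for reward in grid:
        likelihood = 1.0
        for cost, acted in observations:
            p = p_act(reward, cost)
            likelihood *= p if acted else (1.0 - p)
        weights.append(likelihood)
    total = sum(weights)
    return [w / total for w in weights]


def expected_reward(observations, grid):
    """Posterior mean of the reward the observer attributes to the goal."""
    posterior = posterior_reward(observations, grid)
    return sum(r * p for r, p in zip(grid, posterior))


# Candidate reward values from 0.0 to 4.0 in steps of 0.1.
grid = [i * 0.1 for i in range(41)]

# Hypothetical costs for the small, medium, and tall barriers.
SMALL, MEDIUM, TALL = 1.0, 2.0, 3.0

# Object A: the character jumped the small barrier, refused the medium one.
obs_a = [(SMALL, True), (MEDIUM, False)]
# Object B: the character jumped the medium barrier, refused the tall one.
obs_b = [(MEDIUM, True), (TALL, False)]

value_a = expected_reward(obs_a, grid)
value_b = expected_reward(obs_b, grid)
print(f"inferred value of A: {value_a:.2f}, of B: {value_b:.2f}")
```

Under these assumptions the observer attributes a higher value to the object the character worked harder to reach, which is the pattern of expectation the infants' looking times suggest.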

The current research showed that infants, too, make sense of the actions that people undertake or forgo by inferring the cost of each action and the value of the goal it would produce.

“Popular models in machine learning propose that machines, and perhaps humans, can best be made intelligent if they are fed massive amounts of data from which they can learn the myriad features that distinguish one set of goals or preferences from another,” Ullman explained.

“In these experiments, however, infants distinguished between the preferences of an actor even though no simple perceptual cues, either in the environment or in the action itself, distinguished the more-valued object. A computational model that actually understands this level of planning — mental states, desire, costs, and efforts — is richer and more structured than a model that relies only on associating statistical regularities.”

The next steps include asking whether, in reasoning about people’s choices of actions, infants account for the probability that an action plan will fail, and whether they infer what an actor values not only from physical costs but also from the psychological costs of actions that are risky or aversive. Further questions concern the relation of infants’ grasp of intuitive psychology to their grasp of intuitive physics, such as whether infants can harness their understanding of people’s actions to learn more about the physical world, or, conversely, whether they can learn more about people’s plans and goals by leveraging their knowledge of the objects on which people act.

“Ten-month-old infants devote a lot of time to exploring both the properties of the physical world and their own action capacities. When they push something heavy and set it in motion, they may learn something both about the experiences that accompany their own actions and about the properties of the things they act on,” said Spelke. “We want to be looking more at the interaction of physics and psychology in these situations.”

This research was funded by the Center for Brains, Minds, and Machines and the National Science Foundation.