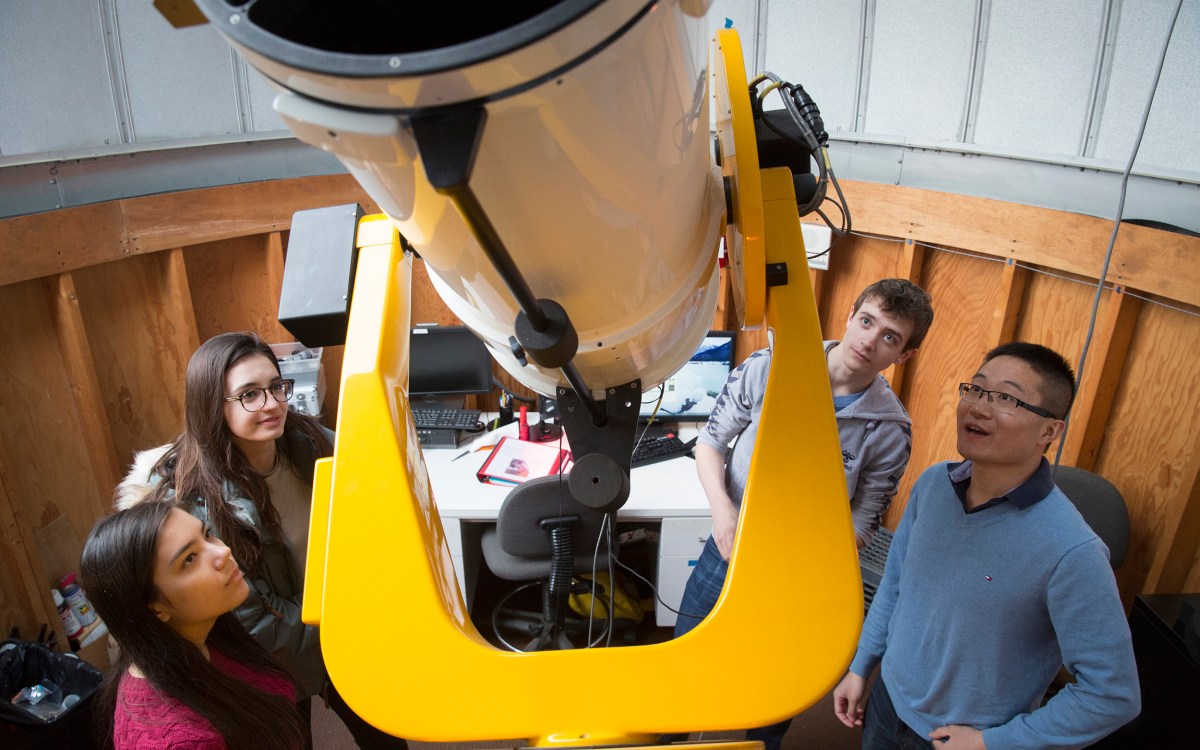

Astronomy Lab Manager Allyson Bieryla has worked to make reaching the cosmos accessible to all through technology.

Kris Snibbe/Harvard Staff Photographer

Astronomy Lab sees the light — and wants everyone else to, too

Accessibility devices use sound to allow the visually impaired to envision stars

“I have been here for 11 years, and introducing students to the telescope doesn’t get old,” says Allyson Bieryla, manager of the Astronomy Lab and Clay Telescope. “When someone sees Saturn for the first time through the telescope and shouts, ‘No way. That’s a cartoon. That’s not real. That’s so cool!’ — that instant excitement is contagious. That shouldn’t be denied to anyone.”

Bieryla has, in fact, made the mission of accessibility and inclusivity a top priority, pioneering tools and redesigning space to help people with physical disabilities experience the wonders of astronomy. For instance, the lab uses a tactile printer to create a sort of topographical map of star systems that people can explore with their hands.

“Think of it like Braille,” Bieryla said. “The printer produces heat and, using special heat-sensitive paper, creates images that are raised so a student who can’t see the images can feel them and understand what other students are seeing.”

The printer represented the beginning of bigger efforts. With design help from Harvard science demonstrator Daniel Davis, Bieryla and Sóley Hyman ’19 built and distributed a device they created called LightSound. The devices use simple circuit board technology with sensors that convert light into sound — brighter light translates to higher pitch — to allow those with visual impairments to experience solar eclipses. The efforts of the Harvard team have been an extension of the work of the blind astronomer Wanda Diaz Merced, who pioneered “sonification” to turn data into sound. Much of Merced’s work took place at the Center for Astrophysics in 2014‒15.
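The idea that brighter light translates to higher pitch can be illustrated with a short sketch. It is not the LightSound's actual code, which the lab publishes separately. The function name, the lux range, and the 220 to 880 Hz tone range are all assumptions chosen for illustration, as is the logarithmic scaling, which is used here only because daylight and totality differ in brightness by several orders of magnitude.

```python
import math


def brightness_to_frequency(lux, min_lux=0.1, max_lux=100_000.0,
                            f_low=220.0, f_high=880.0):
    """Map a light reading to a tone frequency in Hz.

    Brighter light gives a higher pitch. Every default value here is a
    hypothetical choice for illustration, not a LightSound setting.
    """
    # Keep the reading inside the range the mapping is defined for.
    clamped = min(max(lux, min_lux), max_lux)
    # Position of the reading within the range, from 0.0 to 1.0, on a log scale.
    fraction = math.log(clamped / min_lux) / math.log(max_lux / min_lux)
    # Interpolate geometrically so equal brightness ratios give equal pitch steps.
    return f_low * (f_high / f_low) ** fraction


if __name__ == "__main__":
    # Made-up readings falling toward totality: the tone drops as the light fades.
    for reading in (50_000.0, 5_000.0, 50.0, 0.5):
        print(f"{reading:>10.1f} lux -> {brightness_to_frequency(reading):6.1f} Hz")
```

Under these assumptions, a listener following an eclipse would hear the tone slide downward as the moon covers the sun and rise again afterward.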

The first version of the device, LightSound 1.0, was used for the Great American Eclipse in 2017. For the South American eclipse last month, Hyman and Bieryla redesigned the device as LightSound 2.0, with improved sensitivity and a wider variety of sounds. The lab received a grant from the International Astronomical Union to build and distribute about two dozen LightSounds. Bieryla and Hyman shipped the devices, which cost about $60 each to build, to Chile and Argentina so the visually impaired could experience the July 2 solar eclipse in the Southern Hemisphere.

“The reception of it was very, very positive,” said Hyman, who graduated with a joint concentration in astrophysics and physics and a secondary in music. “It was really moving to hear some of the comments people had, people who were blind from birth describing how profound it was to use the device as the [eclipse] came into totality. It was exciting to see how powerful sonification can be and reminds me of how a symphony can move people emotionally.”

The lab developed a second device called Orchestar that uses a different sensor to translate colored light into sound. It improves on the first by turning different colored lights into different pitches of sound. Blue light, for example, is a higher pitch, and red is a lower one. This is important because the color of a star relates to its temperature, so the Orchestar can be used to help the visually impaired understand the differences between stars. Both devices can interface with computers to collect and analyze data, and the lab has put assembly instructions for both devices, including computer code, online so anyone can build one.
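A color-to-pitch mapping of this kind might look something like the sketch below. It is only an illustration and does not reproduce the Orchestar's published code. The function name, the three channel pitches, and the idea of blending them by each color's share of the measured light are all assumptions.

```python
import math

# Hypothetical pitch for each color channel, in Hz: red lowest, blue highest.
CHANNEL_PITCH_HZ = {"red": 262.0, "green": 392.0, "blue": 523.0}


def color_to_frequency(red, green, blue):
    """Blend the channel pitches, weighted by how much of each color was measured.

    Returns None when no light is detected. The readings are assumed to be
    non-negative numbers from a color sensor.
    """
    readings = {"red": red, "green": green, "blue": blue}
    total = sum(readings.values())
    if total <= 0:
        return None  # nothing measured, so no tone
    # Average in log-frequency so the blend sits between the pitches musically.
    log_pitch = sum(value / total * math.log(CHANNEL_PITCH_HZ[name])
                    for name, value in readings.items())
    return math.exp(log_pitch)


if __name__ == "__main__":
    # Made-up readings: a bluish (hotter) star sounds higher than a reddish (cooler) one.
    print("bluish star :", round(color_to_frequency(20, 40, 90), 1), "Hz")
    print("reddish star:", round(color_to_frequency(90, 40, 20), 1), "Hz")
```

With a mapping like this, two stars of different temperatures produce distinguishably different tones, which is the comparison the device is meant to make audible.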

“Data is data, whether you plot the numbers visually or plot the numbers with sound. You are tracking the same information,” Bieryla said. “We also know that your ears can be more sensitive than your eyes, so sometimes you can pick out subtleties in the data with your ears that you might miss with your eyes.”

“Sonification isn’t just a tool for the visually impaired, but for anyone to analyze data,” Hyman added.