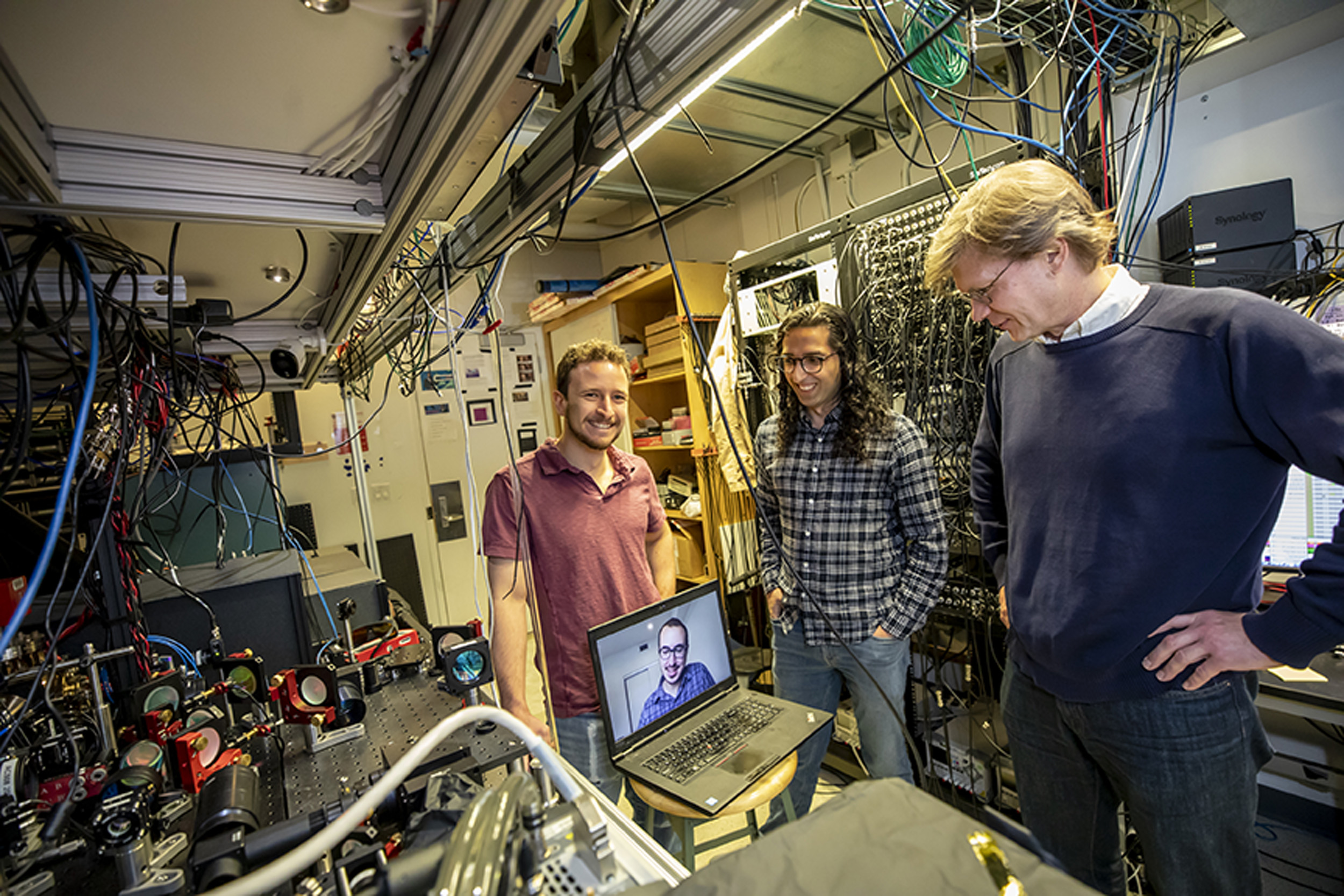

Dolev Bluvstein (from left), Harry Levine (on the laptop), Sepehr Ebadi, and Mikhail Lukin have created a new method for shuttling entangled atoms in a quantum processor, an advance at the forefront of efforts to build large-scale programmable quantum machines.

Rose Lincoln/Harvard Staff Photographer

New approach may help clear hurdle to large-scale quantum computing

Harvard-led team gets around error problems by moving, connecting atoms in mid-computation

Building a plane while flying it isn’t typically a goal for most, but for a team of Harvard-led physicists that general idea might be a key to finally building large-scale quantum computers.

In a new paper in Nature, the research team, which includes collaborators from QuEra Computing, MIT, and the University of Innsbruck, describes a new approach for processing quantum information that allows them to dynamically change the layout of atoms in their system by moving and connecting them with each other in the midst of computation.

This ability to shuffle the qubits (the fundamental building blocks of quantum computers and the source of their massive processing power) during the computation process while preserving their quantum state dramatically expands processing capabilities and allows for self-correction of errors. Clearing this hurdle marks a major step toward building large-scale machines that leverage the bizarre characteristics of quantum mechanics and promise to bring about real-world breakthroughs in material science, communication technologies, finance, and many other fields.

“The reason why building large-scale quantum computers is hard is because eventually you have errors,” said Mikhail Lukin, the George Vasmer Leverett Professor of Physics, co-director of the Harvard Quantum Initiative, and one of the senior authors of the study. “One way to reduce these errors is to just make your qubits better and better, but another more systematic and ultimately practical way is to do something which is called quantum error correction. That means that even if you have some errors, you can correct these errors during your computation process with redundancy.”

In classical computing, error correction is done by simply copying information from a single binary digit or bit so it’s clear when and where it failed. For example, one single bit of 0 can be copied three times to read 000. When it suddenly reads 001, it’s clear where the error is and it can be corrected. A foundational limitation of quantum mechanics is that information can’t be copied, making error correction difficult.
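The three-copy scheme described above can be sketched in a few lines of Python. This is only an illustration of the classical idea, not anything from the researchers' system; the function names `encode` and `decode` are hypothetical, and the sketch assumes at most one of the three copies is flipped.

```python
from collections import Counter

def encode(bit, copies=3):
    """Classical repetition code: store the same bit several times."""
    return [bit] * copies

def decode(codeword):
    """Recover the bit by majority vote and report which positions disagree."""
    majority = Counter(codeword).most_common(1)[0][0]
    flipped = [i for i, b in enumerate(codeword) if b != majority]
    return majority, flipped

codeword = encode(0)             # [0, 0, 0]
codeword[2] ^= 1                 # a single error turns it into [0, 0, 1]
value, errors = decode(codeword)
print(value, errors)
```

The majority vote works only because the bit can be read and copied freely, which is exactly the step that quantum mechanics forbids for an unknown quantum state.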

The workaround the researchers implement creates a sort of backup system for the atoms and their information called a quantum error correction code. The researchers use their new technique to create many of these correction codes, including what’s known as a toric code, and spread them throughout the system.

“The key idea is we want to take a single qubit of information and spread it as nonlocally as possible across many qubits, so that if any single one of these qubits fails it doesn’t actually affect the entire state that much,” said Dolev Bluvstein, a graduate student in the Physics Department from the Lukin group who led this work.

What makes this approach possible is that the team developed a new method where any qubit can connect to any other qubit on demand. This happens through entanglement or what Einstein called “spooky action at a distance.” In this context, two atoms become linked and able to exchange information no matter how far apart they are. This phenomenon is what makes quantum computers so powerful.

“This entanglement can store and process an exponentially large amount of information,” Bluvstein said.

The new work builds upon the programmable quantum simulator the lab has been developing since 2017. The researchers added new capabilities that allow them to move entangled atoms in the middle of an operation without losing their quantum state.

Previous research in quantum systems showed that once the computation process starts, the atoms, or qubits, are stuck in their positions and only interact with qubits nearby, limiting the kinds of quantum computations and simulations that can be done between them.

The key is that the researchers can create and store information in what are known as hyperfine qubits. The quantum state of these more robust qubits lasts significantly longer than that of the regular qubits in their system (several seconds versus microseconds). This gives the researchers the time they need to entangle these qubits with others, even far-away ones, so they can create complex states of entangled atoms.

The entire process looks like this: The researchers do an initial pairing of qubits, pulse a global laser to create a quantum gate that entangles these pairs, and then store the information of each pair in the hyperfine qubits. Then, using a two-dimensional array of individually focused laser beams called optical tweezers, they move these qubits into new pairs with other atoms in the system to entangle them as well. They repeat the steps in whatever pattern they want to create different kinds of quantum circuits to perform different algorithms. Eventually, the atoms all become connected in a so-called cluster state and are spread out enough to act as backups for each other in case of an error.
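The pair, entangle, move, and repeat loop described above can be sketched as bookkeeping on a graph, where each atom is a node and each entangling gate adds a link. This is a toy model under stated assumptions, not the team's control software: it assumes six atoms in a row, assumes the entangling gate behaves like a controlled-Z gate (which undoes itself if applied twice to the same pair), and uses hypothetical names `entangle_layer` and `is_connected`. It tracks only which atoms are linked, not the full quantum state.

```python
def entangle_layer(edges, pairs):
    """Apply one global entangling pulse to the current pairs.

    Assumption: the gate acts like a controlled-Z, so applying it twice
    to the same pair removes the link (symmetric difference of edge sets).
    """
    return edges ^ {frozenset(pair) for pair in pairs}

def is_connected(n, edges):
    """Check whether all n atoms are joined into one entangled cluster."""
    seen, stack = {0}, [0]
    while stack:
        q = stack.pop()
        for edge in edges:
            if q in edge:
                (other,) = edge - {q}
                if other not in seen:
                    seen.add(other)
                    stack.append(other)
    return len(seen) == n

n = 6                                  # hypothetical number of atoms
layer1 = [(0, 1), (2, 3), (4, 5)]      # initial pairing
layer2 = [(1, 2), (3, 4)]              # pairing after the tweezers move atoms

edges = entangle_layer(set(), layer1)
connected_after_1 = is_connected(n, edges)   # isolated pairs only
edges = entangle_layer(edges, layer2)
connected_after_2 = is_connected(n, edges)   # one chain linking every atom
print(connected_after_1, connected_after_2)
```

Changing the pairing lists between layers is the software analogue of choosing a different movement pattern, which is how a single set of steps can yield different circuits.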

Already, Bluvstein and his colleagues have used this architecture to build a programmable, error-correcting quantum computer operating at 24 qubits, and they plan to scale up from there. The system has become the basis for their vision of a quantum processor.

“In the very near term, we basically can start using this new method as a kind of sandbox where we will really start developing practical methods for error correction and exploring quantum algorithms,” Lukin said. “Right now [in terms of getting to large-scale, useful quantum computers], I would say we have climbed the mountain enough to see where the top is and can now actually see a path from where we are to the highest top.”

This work was supported by the Center for Ultracold Atoms, the National Science Foundation, the Vannevar Bush Faculty Fellowship, the U.S. Department of Energy Quantum Systems Accelerator, the Office of Naval Research, the Army Research Office MURI, and the DARPA ONISQ program.