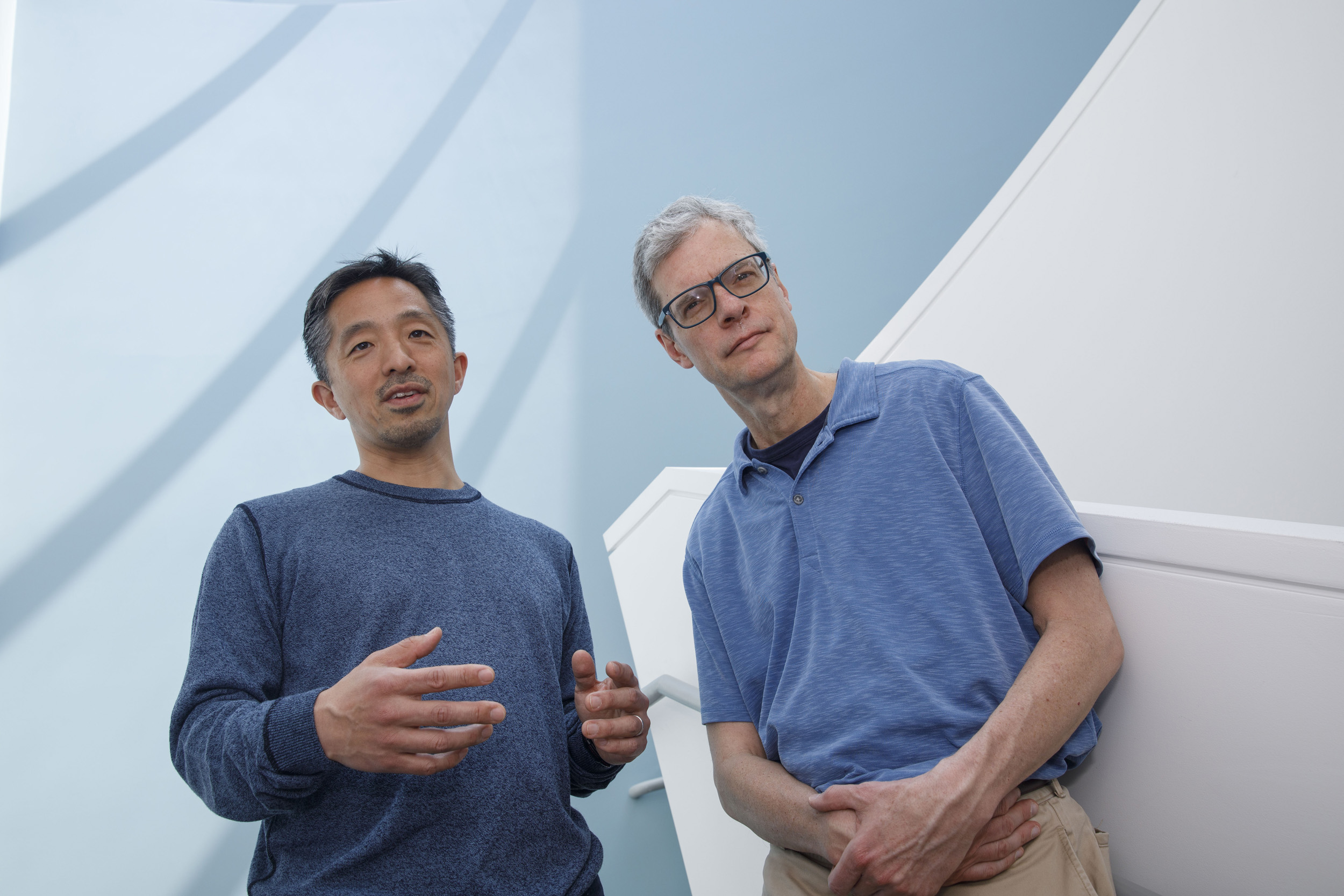

Study co-authors Kosuke Imai (left) and James Greiner.

Kris Snibbe/Harvard Staff Photographer

Does AI help humans make better decisions?

One judge’s track record — with and without algorithm — surprises researchers

Should artificial intelligence be used to improve decision-making in courts of law? According to a new working paper, not only does one AI algorithm fail to improve the accuracy of judicial calls, but on its own the technology fares worse than a human judge.

“A lot of researchers have focused on whether the algorithm has a bias or AI has a bias,” noted co-author Kosuke Imai, professor of government and statistics. “What they haven’t really looked at is how the use of AI affects the human decision.”

While several sectors, including criminal justice, medicine, and even business, use AI recommendations, humans are typically the final decision-makers. The researchers took this into account by comparing criminal bail decisions made by a single judge with recommendations generated by an AI system. Specifically, they analyzed the AI’s influence on whether cash bail should be imposed.

The randomized controlled trial was conducted in Dane County, Wisconsin, and focused on whether arrestees were released on their own recognizance or subjected to cash bail. Led by Imai and Jim Greiner, the Honorable S. William Green Professor of Public Law at Harvard Law School, the researchers set their sights on hearings held by a single judge over a 30-month period, from mid-2017 through the end of 2019. They also analyzed arrest data on the defendants for up to 24 months afterward.

Results showed that the AI alone performed worse than the judge at predicting which arrestees would reoffend; in this case, it recommended the tighter restriction of cash bail too often. At the same time, little to no difference was found between the accuracy of human-alone and AI-assisted decision-making. The judge went against the AI’s recommendations in slightly more than 30 percent of cases.

“I was surprised by this,” Greiner said. “Given the evidence that we’ve cited that algorithms can sometimes outperform human decisions, it looked as though what happened is that this algorithm had been set to be too harsh. It was over-predicting that the arrestees would misbehave, predicting that they would do so too often, and, therefore, recommending measures that were too harsh.”

This issue could be fixed by recalibrating the algorithm, the professors argued.

“It’s a lot easier to understand and then fix the algorithm or AI than the human,” Imai said. “It’s a lot harder to change the human or understand why humans make their decisions.”

The AI studied here did not explicitly account for race, instead relying on age and nine factors related to criminal history. Imai, an expert in using statistical modeling to expose racial gerrymandering, attributed inequities in cash bail decisions to a variety of societal factors, particularly those relating to criminal history.

He acknowledged that the study’s findings may be cause for concern, but noted that people are biased as well. “The advantage of AI or an algorithm is that it can be made transparent,” he said. The key, he argued, is open-source AI that is readily available for empirical evaluation and analysis.

Greiner added that both the way the criminal justice system currently uses AI and unguided human decisions should be studied with an eye to making improvements. “I don’t know whether this is comforting,” he offered, “but my reaction for folks who are afraid or skeptical of AI is to be afraid and skeptical of AI, but to be potentially more afraid or skeptical of unguided human decisions.”

The paper’s other co-authors were Eli Ben-Michael, assistant professor of statistics and data science at Carnegie Mellon University; Zhichao Jiang, professor of mathematics at Sun Yat-sen University in China; Melody Huang, a postdoctoral researcher at the Wojcicki Troper Harvard Data Science Institute; and Sooahn Shin, a Ph.D. candidate in government at the Kenneth C. Griffin Graduate School of Arts and Sciences.