Bence Ölveczky.

Niles Singer/Harvard Staff Photographer

Want to make robots more agile? Take a lesson from a rat.

Scientists create realistic virtual rodent with digital neural network to study how brain controls complex, coordinated movement

The effortless agility with which humans and animals move is an evolutionary marvel that no robot has yet been able to closely emulate. To help probe the mystery of how brains control and coordinate it all, Harvard neuroscientists have created a virtual rat with an artificial brain that can move around just like a real rodent.

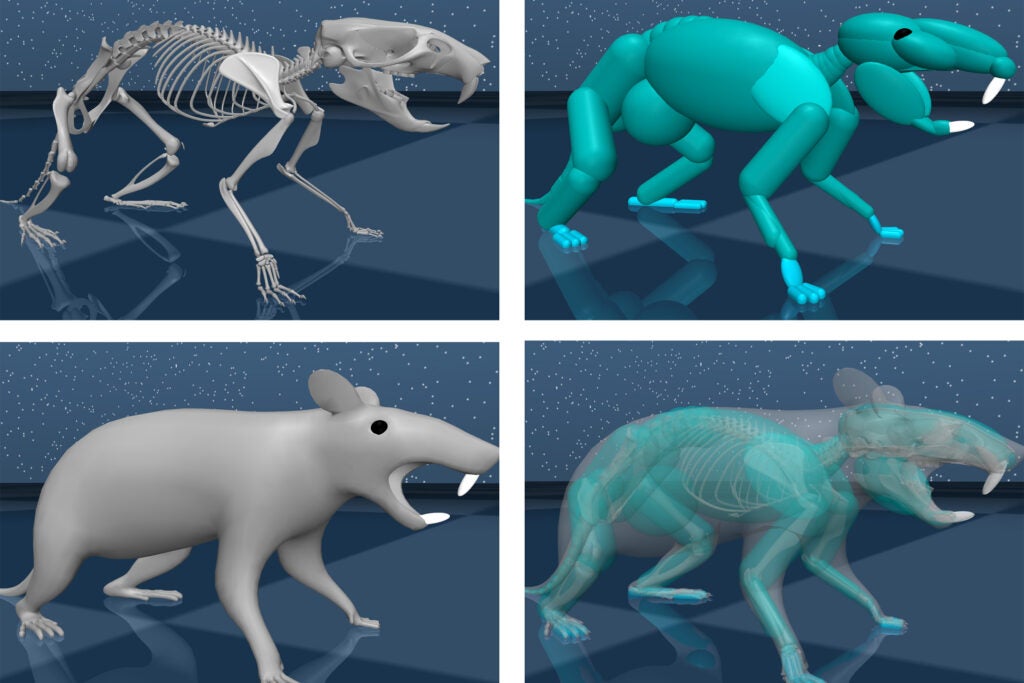

Bence Ölveczky, professor in the Department of Organismic and Evolutionary Biology, led a group of researchers who collaborated with scientists at Google’s DeepMind AI lab to build a biomechanically realistic digital model of a rat. Using high-resolution data recorded from real rats, they trained an artificial neural network (the virtual rat’s “brain”) to control the virtual body in a physics simulator called MuJoCo, where gravity and other forces are present. And the results are promising.

Harvard and Google researchers created a virtual rat using movement data recorded from real rats.

Credit: Google DeepMind

In a study published in Nature, the researchers found that activations in the virtual control network accurately predicted neural activity measured from the brains of real rats producing the same behaviors, said Ölveczky, who is an expert at training (real) rats to learn complex behaviors in order to study their neural circuitry. The feat represents a new approach to studying how the brain controls movement, Ölveczky said, by leveraging advances in deep reinforcement learning and AI, as well as 3D movement-tracking in freely behaving animals.

The collaboration was “fantastic,” Ölveczky said. “DeepMind had developed a pipeline to train biomechanical agents to move around complex environments. We simply didn’t have the resources to run simulations like those, to train these networks.”

Working with the Harvard researchers was, likewise, “a really exciting opportunity for us,” said co-author and Google DeepMind Senior Director of Research Matthew Botvinick. “We’ve learned a huge amount from the challenge of building embodied agents: AI systems that not only have to think intelligently, but also have to translate that thinking into physical action in a complex environment. It seemed plausible that taking this same approach in a neuroscience context might be useful for providing insights in both behavior and brain function.”

Graduate student Diego Aldarondo worked closely with DeepMind researchers to train the artificial neural network to implement what are called inverse dynamics models, which scientists believe our brains use to guide movement. When we reach for a cup of coffee, for example, our brain quickly calculates the trajectory our arm should follow and translates this into motor commands. Similarly, based on data from actual rats, the network was fed a reference trajectory of the desired movement and learned to produce the forces to generate it. This allowed the virtual rat to imitate a diverse range of behaviors, even ones it hadn’t been explicitly trained on.
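For readers who want a concrete feel for what an inverse dynamics calculation does, here is a toy sketch in Python. It is not the study's method: the researchers trained a neural network on a full rat body in a physics simulator, whereas this example assumes a single point mass sliding along one axis with simple damping. The function names, the mass and damping values, and the sine-wave reference trajectory are all made up for illustration.

```python
import math


def inverse_dynamics(ref, dt, mass=1.0, damping=0.5):
    """Return the force needed at each step to move a 1-D point mass along `ref`.

    Toy assumption: the mass obeys  mass * accel = force - damping * velocity
    and starts at rest at ref[0].
    """
    forces = []
    vel = 0.0
    for cur, nxt in zip(ref, ref[1:]):
        target_vel = (nxt - cur) / dt      # velocity that reaches the next point
        accel = (target_vel - vel) / dt    # acceleration needed to get that velocity
        forces.append(mass * accel + damping * vel)
        vel = target_vel
    return forces


def simulate(x0, forces, dt, mass=1.0, damping=0.5):
    """Play the forces forward through the same toy physics and record positions."""
    xs = [x0]
    x, vel = x0, 0.0
    for force in forces:
        vel += dt * (force - damping * vel) / mass
        x += dt * vel
        xs.append(x)
    return xs


dt = 0.01
# Hypothetical reference trajectory: one cycle of a sine wave.
reference = [math.sin(2 * math.pi * k * dt) for k in range(101)]

forces = inverse_dynamics(reference, dt)
replayed = simulate(reference[0], forces, dt)

# Largest gap between the desired path and the path the forces actually produce.
max_error = max(abs(a - b) for a, b in zip(reference, replayed))
print(f"largest tracking error: {max_error:.2e}")
```

The first function goes from a desired movement to the forces that would produce it; the second checks the answer by applying those forces and seeing where the mass ends up. In this toy the physics is known exactly, so the forces can be written down directly. The virtual rat's network, by contrast, had to learn that mapping for a body with many joints.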

These simulations may launch an untapped area of virtual neuroscience in which AI-simulated animals, trained to behave like real ones, provide convenient and fully transparent models for studying neural circuits, and even how such circuits are compromised in disease. While Ölveczky’s lab is interested in fundamental questions about how the brain works, the platform could be used, as one example, to engineer better robotic control systems.

A next step might be to give the virtual animal autonomy to solve tasks akin to those encountered by real rats. “From our experiments, we have a lot of ideas about how such tasks are solved, and how the learning algorithms that underlie the acquisition of skilled behaviors are implemented,” Ölveczky continued. “We want to start using the virtual rats to test these ideas and help advance our understanding of how real brains generate complex behavior.”

This research received financial support from the National Institutes of Health.